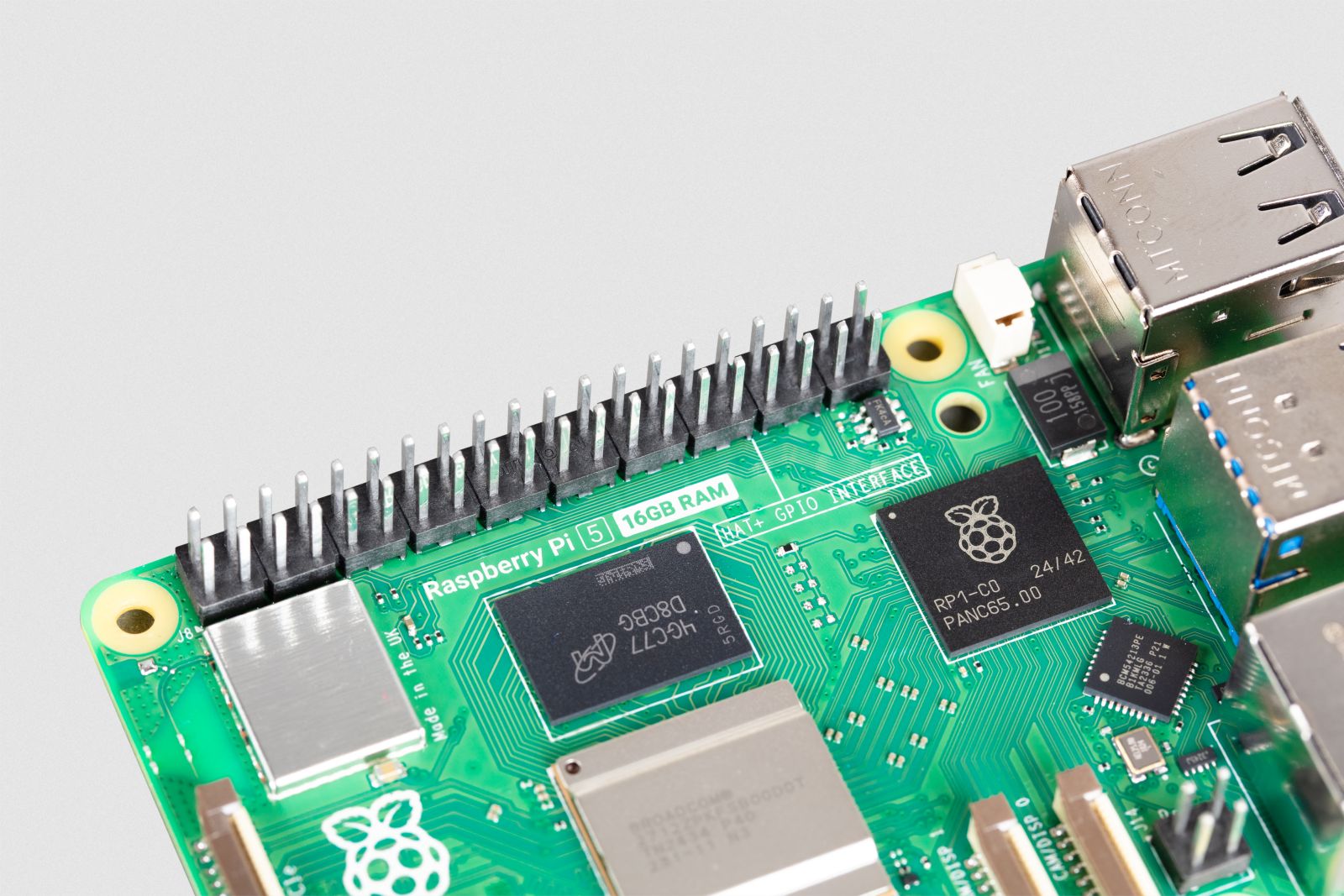

Imagine having the power of advanced language models, once limited to massive data centers, running directly on a compact device like the Raspberry Pi 5 (16GB). That's the promise of local inference with DeepSeek-R1, a first-generation reasoning model series known for delivering performance comparable to OpenAI's o1. In this post, we'll explore how to get started with DeepSeek, compare it to alternatives like ChatGPT and Llama, discuss why specialized hardware like HAILO might not be the best fit for large language models (LLMs), and walk you through the installation process step by step. Whether you're an enthusiast or an edge AI developer, you'll find the technical details you need right here.

What Is DeepSeek?

DeepSeek is a collection of reasoning models designed for tasks like math, coding, and general-purpose language understanding. The DeepSeek-R1 series (licensed under MIT) supports commercial use and can be fine-tuned, distilled, or integrated into various workflows. The series comes in a range of sizes to suit different hardware, from ultra-compact boards such as the Raspberry Pi 5 to high-end GPUs on desktop systems.

DeepSeek vs. ChatGPT vs. Llama

- ChatGPT: A popular closed-source, cloud-hosted solution by OpenAI, typically accessed via an API or web interface. It offers high-quality responses but requires an internet connection and can incur usage costs.

- Llama: Meta's open-source LLM family that aims to bring large language model capabilities to local environments. While it offers multiple model sizes, memory requirements can still be significant, especially on smaller devices.

- DeepSeek: Provides a balance of local inference and smaller model sizes (e.g., 1.5B, 7B, 8B) by distilling larger models into smaller footprints without losing significant performance.

Why run local LLMs at all? You retain data privacy, reduce latency, and avoid recurring API fees. However, you must manage hardware resources and face potential slowdowns, especially with very large model sizes.

Why HAILO Might Not Be a Great Fit

The HAILO chip is specifically optimized for convolutional neural networks (CNNs), which excel in tasks like image recognition or basic computer vision. Large language model inference, however, often involves massive matrix multiplications and attention layers not as closely aligned with CNN-focused architectures. As a result, HAILO's edge accelerators may not deliver the token throughput needed for LLMs, making general-purpose CPUs/GPUs on devices such as the Raspberry Pi 5 more practical for text-based inference.

Step-by-Step: Installing DeepSeek on Raspberry Pi 5 (16GB)

- Update System

  sudo apt-get update && sudo apt-get upgrade -y

  Ensures you have the latest packages and kernel updates.

- Install Dependencies

  sudo apt-get install -y curl git build-essential

  This makes sure that the tools needed to build and run the necessary libraries are installed. Normally they are already installed on a fresh Raspberry Pi OS installation.

- Install Ollama

  Ollama is a command-line utility that simplifies working with local LLMs. Install it with the following command:

  curl -fsSL https://ollama.com/install.sh | sh

- Download DeepSeek-R1 Model

  Visit the official DeepSeek-R1 Ollama page and choose your model, or run:

  ollama run deepseek-r1:7b

  This downloads and runs the 7 billion parameter model, which strikes a good balance between performance and quality. For the smallest and fastest variant, use deepseek-r1:1.5b instead. Wait for the weights (up to several GB) to download. This can take several minutes.

- Test Your Setup

  Once the download completes, you can chat with your model in the terminal. What a time to be alive!
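Beyond the interactive terminal, the same model can be queried from a script. The following is a minimal sketch, assuming Ollama's local REST API is listening on its default port 11434 and that the `deepseek-r1:1.5b` tag has already been downloaded. The helper names (`build_payload`, `strip_reasoning`, `ask`) are ours, chosen for illustration. R1 models typically emit their reasoning inside `<think>` tags, so the sketch strips that block before returning the answer.

```python
import json
import re
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "deepseek-r1:1.5b") -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def strip_reasoning(text: str) -> str:
    """Remove <think>...</think> blocks so only the final answer remains."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def ask(prompt: str, model: str = "deepseek-r1:1.5b", timeout: float = 600.0) -> str:
    """Send one prompt to the local Ollama server and return the answer text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
    )
    # The generous timeout is deliberate: larger models on a Pi answer slowly.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return strip_reasoning(reply["response"])
```

Calling `ask("What is 2 + 2?")` on the Pi should return the model's answer as a plain string. Only the standard library is used, so nothing extra needs to be installed.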

Performance and Inference on Raspberry Pi 5 (16GB)

| Model | Parameters | Model Size (GB) | Tokens/s |
|---|---|---|---|
| DeepSeek-R1-Distill-Qwen-1.5B | 1.5 billion | 1.1 | ~9.58 |
| DeepSeek-R1-Distill-Qwen-7B | 7 billion | 4.7 | ~2.23 |
| DeepSeek-R1-Distill-Qwen-14B | 14 billion | 9.0 | ~1.2 |
| Llama3.1:8B | 8 billion | 4.9 | ~2.17 |

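Real-world usage includes chat-style Q&A, code snippet generation, and simpler creative tasks. DeepSeek's distilled models aim to match or exceed Llama's throughput at similar or smaller sizes: in our measurements, the 7B distilled model (~2.23 tokens/s) runs slightly faster than Llama3.1:8B (~2.17 tokens/s).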

Running a small model like DeepSeek-R1-Distill-Qwen-1.5B on a Pi 5 yields about 9.58 tokens/s, which is a good starting point for a local inference setup. It's not blazingly fast, but it provides a decent experience for occasional use and smaller projects, and it can be a useful alternative to a cloud-based service like OpenAI's for development and experimentation.
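To put these throughput figures in perspective, the sketch below converts them into rough waiting times. It assumes a 200-token reply purely for illustration, and the function names are ours. The `tokens_per_second` helper shows how a rate can be derived from the `eval_count` and `eval_duration` (nanoseconds) fields that Ollama's generate API returns, in case you want to reproduce measurements on your own board.

```python
def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Generation speed from Ollama's eval_count and eval_duration (nanoseconds)."""
    return eval_count / (eval_duration_ns / 1e9)


# Throughput figures copied from the benchmark table in this post.
MEASURED_RATES = {
    "DeepSeek-R1-Distill-Qwen-1.5B": 9.58,
    "DeepSeek-R1-Distill-Qwen-7B": 2.23,
    "DeepSeek-R1-Distill-Qwen-14B": 1.2,
    "Llama3.1:8B": 2.17,
}


def estimate_reply_times(num_tokens: int = 200) -> dict:
    """Seconds needed to generate num_tokens for each model, rounded to 0.1 s."""
    return {
        name: round(num_tokens / rate, 1)
        for name, rate in MEASURED_RATES.items()
    }


for name, seconds in estimate_reply_times().items():
    print(f"{name}: about {seconds} s for a 200-token reply")
```

At these rates, a 200-token reply takes roughly 21 seconds on the 1.5B model and close to three minutes on the 14B model. Reasoning models also spend tokens on their thinking step, so actual waits can be longer than the final answer's length suggests.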

Building Edge AI with DeepSeek

With DeepSeek running on a local Pi 5, you can embed LLM capabilities anywhere - without relying on cloud connectivity. Some use cases include:

- On-Device Chatbot: For offline customer service or kiosk systems.

- IoT Control: Natural language interfaces for smart devices in factories or smart homes.

- Educational Tools: Interactive tutoring or language assistance in remote areas without consistent internet access.

- Robotics & Automation: Real-time decision-making with minimal latency.
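As an illustration of the IoT control use case, here is a hedged sketch of how a model reply could be turned into a device action safely. It assumes you prompt the model to answer with a small JSON object such as `{"device": "lamp", "action": "on"}`. The device names, the allow-list, and the `parse_command` helper are all hypothetical. The point is that model output is validated against known commands before anything is switched.

```python
import json

# Hypothetical allow-list: device name -> actions that may be triggered.
ALLOWED_ACTIONS = {
    "lamp": {"on", "off"},
    "fan": {"on", "off"},
}


def parse_command(model_reply: str):
    """Return (device, action) if the reply is a permitted JSON command, else None."""
    try:
        data = json.loads(model_reply)
    except json.JSONDecodeError:
        return None  # The model answered in free text instead of JSON.
    if not isinstance(data, dict):
        return None
    device = data.get("device")
    action = data.get("action")
    if not isinstance(device, str) or not isinstance(action, str):
        return None
    # Reject anything that is not explicitly allowed for this device.
    if action in ALLOWED_ACTIONS.get(device, set()):
        return device, action
    return None
```

Treating the model as an untrusted input source keeps a malformed or unexpected reply from reaching the hardware.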

FAQ

- Which models are compatible with Pi 5?

  You can run the smaller (1.5B to 14B) DeepSeek-R1 models on Raspberry Pi 5, but performance varies by model size. For best results, start with 1.5B or 7B variants.

- Is there any certification required to deploy on the Pi 5?

  Generally, no. Raspberry Pi boards are CE certified, and the DeepSeek software is MIT-licensed, so you can integrate it into commercial products without special licensing fees.

- Does DeepSeek support integration with third-party apps?

  Absolutely. DeepSeek works with Ollama's CLI utility and, through Ollama's local REST API, can be called from Python, Node.js, or other environments.

- Can I fine-tune DeepSeek models on the Pi 5 itself?

  It is theoretically possible but will be time-consuming. Many developers prefer to fine-tune on more powerful hardware and then deploy the final model to Pi 5, which is what we recommend as well.

- How do I monitor power and temperature?

  Use commands like vcgencmd measure_temp for the CPU temperature and a watt meter for power consumption. The Pi 5's temperature typically stays within its safe operating range during continuous inference if the board is properly ventilated.
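For unattended setups, the temperature check can be scripted. The sketch below assumes `vcgencmd measure_temp` prints a line of the form `temp=48.3'C`, as it does on Raspberry Pi OS. The 80 °C warning limit and the function names are illustrative assumptions, not an official threshold, so adjust them to your enclosure and cooling.

```python
import re
import subprocess


def parse_temp(output: str) -> float:
    """Extract degrees Celsius from output such as "temp=48.3'C"."""
    match = re.search(r"temp=([0-9]+(?:\.[0-9]+)?)", output)
    if match is None:
        raise ValueError(f"unexpected vcgencmd output: {output!r}")
    return float(match.group(1))


def read_temp() -> float:
    """Run vcgencmd on the Pi and return the reported temperature in Celsius."""
    result = subprocess.run(
        ["vcgencmd", "measure_temp"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_temp(result.stdout)


def is_too_hot(temp_c: float, limit_c: float = 80.0) -> bool:
    """True when the temperature reaches the (assumed) warning limit."""
    return temp_c >= limit_c
```

Polling `read_temp()` every few seconds during a long inference run and logging the values is usually enough to tell whether the board needs a fan or a better case.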

Conclusion

Running advanced language models locally on the Raspberry Pi 5 is no longer just an experiment. With DeepSeek's compact and efficient models, you can achieve near-cloud quality reasoning while keeping data on-premises. Whether you're developing a standalone chatbot or integrating AI into IoT devices, local inference offers privacy, minimal latency, and long-term cost savings.

If you're ready to explore next-level edge AI, download DeepSeek-R1 and fire up Ollama on your Raspberry Pi 5. For personalized guidance, don't hesitate to contact us to discuss your requirements and let us guide you toward the optimal edge AI solution. Happy building!

Resources

- DeepSeek GitHub Repository – Download models, view release notes, and explore documentation.

- Ollama Documentation – Learn how to manage and run LLMs locally.

- Benchmark Script – Compare performance across various models and hardware setups.