Many businesses are turning to edge AI to meet performance and security requirements close to where data is generated. This post introduces Axelera AI and its Metis AI acceleration hardware and software. It is written for business customers who want practical AI at the edge: cost-effective, easy to integrate, and robust enough for demanding applications.

Axelera AI's mission is to simplify AI acceleration for businesses of all sizes by offering modular, inference-focused hardware that fits into familiar system form factors. These solutions aim to make it easier for integrators, OEMs, and developers to deploy vision-based applications anywhere, from small industrial PCs to data center servers.

Key Concepts

- AI Inference: The process of applying a trained machine learning model to new data. At the edge, inference needs to be low-latency and efficient.

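- TOPS: Stands for “Tera Operations per Second,” a measure of peak compute. Higher TOPS can indicate greater throughput for tasks like image classification, although delivered performance also depends on the model and how well it uses the hardware.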

- M.2 & PCIe Form Factors: Standards for connecting add-in hardware to a host system. M.2 is a compact module form factor, while PCIe add-in cards suit desktops and servers; both offer high data throughput.

- RK3588 Processor: A multi-core Arm-based SoC from Rockchip that balances performance with low power consumption, which makes it useful for edge devices.
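
To make the "low-latency" requirement concrete, here is a minimal sketch of how you might measure inference latency on any device. It is not part of the Voyager SDK: `measure_latency_ms`, `summarize`, and `fake_infer` are hypothetical names, and `fake_infer` is only a stand-in for a real model call.

```python
import statistics
import time


def measure_latency_ms(infer, sample, warmup=5, runs=50):
    """Time repeated calls to infer(sample) and return latencies in ms."""
    for _ in range(warmup):  # discard warm-up runs (caches, lazy initialization)
        infer(sample)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies):
    """Return the median and an approximate 95th-percentile latency in ms."""
    ordered = sorted(latencies)
    p95_index = max(0, int(round(0.95 * len(ordered))) - 1)
    return {"p50_ms": statistics.median(ordered), "p95_ms": ordered[p95_index]}


def fake_infer(sample):
    # Hypothetical stand-in for a real model call; it only sums the input.
    return sum(sample)


if __name__ == "__main__":
    print(summarize(measure_latency_ms(fake_infer, list(range(1000)))))
```

Reporting a tail percentile alongside the median is a deliberate choice: real-time pipelines are usually limited by their slowest frames, not their average ones.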

Axelera AI's Edge Inference Hardware

Axelera AI offers multiple hardware form factors to match diverse deployment scenarios. Below are some details that might help you decide which best fits your needs.

M.2 AI Edge Accelerator Card

- Core Specification: Powered by a quad-core Metis AIPU with 1 GB of dedicated DRAM. Typical power consumption is intended to stay below 10 W in many edge scenarios.

- Form Factor & Integration: Slides into an NGFF (Next Generation Form Factor) M.2 socket, widely supported in industrial motherboards and embedded systems.

- Highlights: Enables AI processing for resource-constrained environments, useful for tasks like object detection in small enclosures.

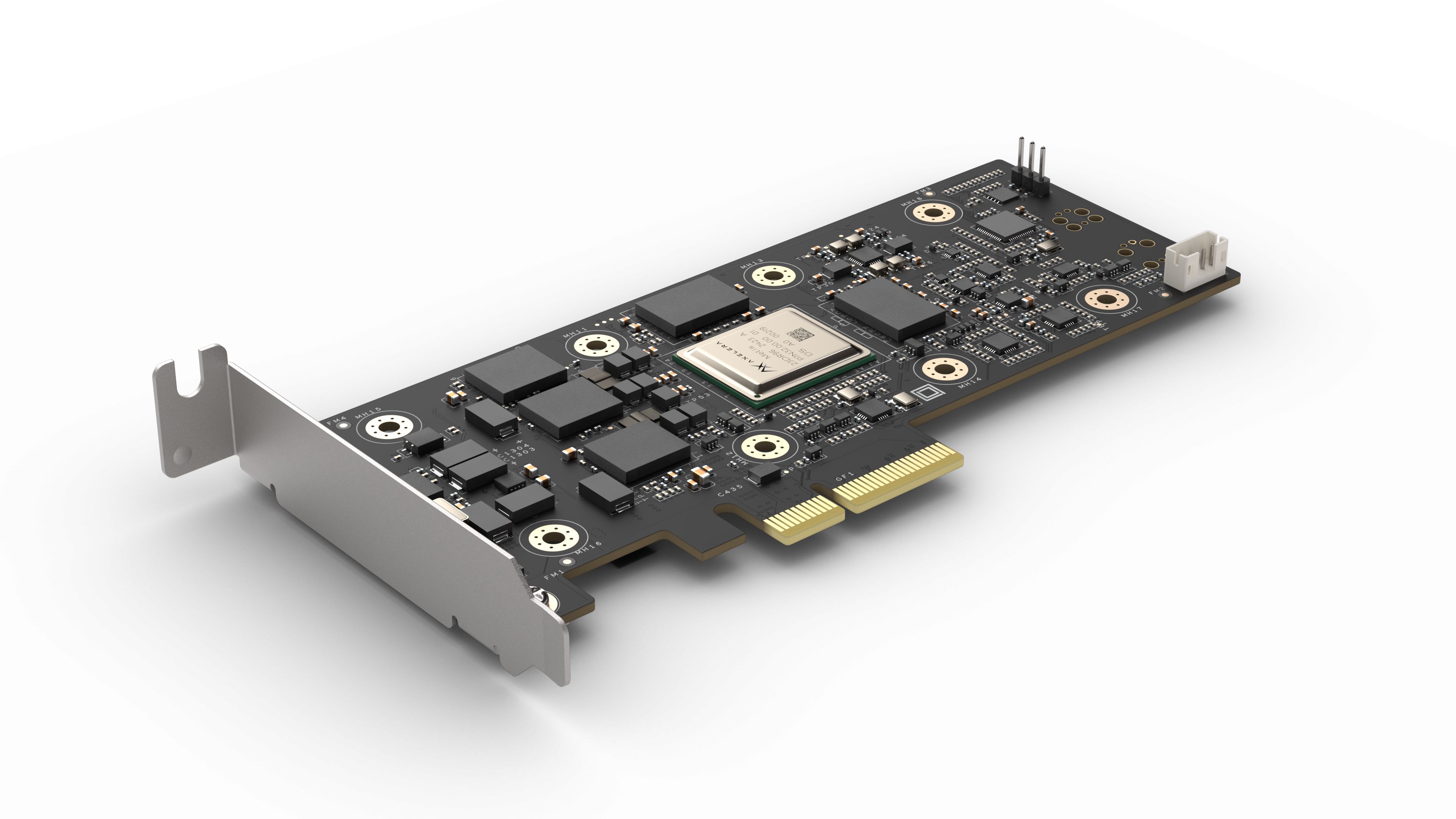

PCIe AI Accelerator Card (Single Metis AIPU)

- Core Specification: Up to 214 TOPS of AI compute, handling up to 3,200 FPS on ResNet-50 under tested conditions.

- Form Factor & Integration: Uses a PCIe interface (for example, x4 or x8 lanes). Can be installed in desktops, servers, or embedded racks.

- Certifications & Operating Conditions: Check official product briefs for details on CE, FCC, and operating temperature ranges.

PCIe AI Accelerator Card (4 × Metis AIPUs)

- Core Specification: Delivers up to 856 TOPS. Tested benchmarks indicate up to 12,800 FPS on ResNet-50 or 38,884 FPS on MobileNet V2-1.0.

- Primary Use Case: High-volume or multi-channel video analytics, advanced robotics, or real-time object detection across multiple streams.

- Performance Consideration: More demanding workloads might require additional cooling, but the card still maintains lower power usage than many GPU-based solutions.

Metis Compute Board with RK3588 (SBC)

- Core Specification: A single-board computer (SBC) integrating a quad-core Metis AIPU, an RK3588 SoC, 64GB eMMC, and flexible storage/IO options.

- I/O & Connectivity: Offers four USB ports, HDMI 2.0, dual Gigabit LAN, GPIO, UART, I2C, and a 4-lane PCIe connection for the onboard AIPU.

- Ideal Applications: Suited for multimodal processing, robotics, or standalone systems requiring a compact form factor and integrated AI capability.

The Voyager SDK and Software Ecosystem

Axelera AI's hardware lineup is complemented by the Voyager SDK, designed to reduce friction and optimize performance:

- High-Level Deployment: Build end-to-end inference pipelines (pre-processing, model execution, post-processing) using a YAML-based approach.

- Model Zoo: Access pre-optimized models for classification, detection, segmentation, and more. These models can be fine-tuned or integrated into PyTorch, TensorFlow, or ONNX workflows.

- Quantization & Optimization: Quantizes models to lower numerical precision while aiming to keep accuracy close to FP32. The goal is a minimal accuracy drop in exchange for substantial power savings.

- Host Integration: Compatible with a variety of operating systems and CPU architectures. Includes GStreamer plugins for multi-stream video pipelines.
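
To illustrate the quantization idea in the list above, the following sketch applies symmetric 8-bit quantization to a handful of made-up weights and measures the round-trip error. It shows the general technique only. The post does not state which number format or algorithm the Voyager SDK uses, so the 8-bit choice and all function names here are assumptions.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


def max_abs_error(original, restored):
    """Largest absolute difference between original and restored values."""
    return max(abs(a - b) for a, b in zip(original, restored))


if __name__ == "__main__":
    weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # made-up example weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print("quantized:", q)
    print("scale:", scale)
    print("max abs error:", max_abs_error(weights, restored))
```

The round-trip error for each value is bounded by about half of `scale`, which is why a well-chosen scale can keep quantized accuracy close to the floating-point baseline. Production toolchains typically go further, for example by calibrating scales on representative data.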

Comparative Analysis

| Feature | M.2 Accelerator | PCIe (Single AIPU) | PCIe (4 × AIPU) | Metis Compute Board (RK3588) |
|---|---|---|---|---|
| Max TOPS | ~214 (quad-core) | 214 | 856 | 214 (quad-core inside SBC) |
| Form Factor | M.2 (NGFF) | PCIe x4/x8 | PCIe x8 (likely) | Single-Board Computer |
| Power Consumption (typ.) | <10 W | <10 W | 10-30 W (varies) | <15 W (SBC + AIPU) |
| Major Use Cases | Embedded, compact builds | High-throughput for servers | Multi-channel, large-scale | Standalone edge system |
| Example Throughput (ResNet-50) | ~3,200 FPS | ~3,200 FPS | ~12,800 FPS | ~3,200 FPS |

Note: These metrics are approximations. Actual results may vary based on workload and system configuration.
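
As a rough sizing aid, the throughput figures above can be turned into a camera-stream estimate. The sketch below is back-of-the-envelope arithmetic under stated assumptions, not a vendor sizing tool: it assumes one ResNet-50 inference per frame, 30 FPS per camera, 20% of capacity held back as headroom, and linear scaling across AIPUs (consistent with the 3,200 and 12,800 FPS figures quoted here). All function names are hypothetical.

```python
# ResNet-50 throughput figures quoted in this post (approximate).
RESNET50_FPS = {"single_aipu": 3200, "quad_aipu_pcie": 12800}


def usable_fps(total_fps, headroom_pct=20):
    """Throughput left after reserving headroom_pct percent as a safety margin."""
    return total_fps * (100 - headroom_pct) // 100


def max_streams(total_fps, fps_per_stream=30, headroom_pct=20):
    """Upper bound on camera streams one device could serve."""
    return usable_fps(total_fps, headroom_pct) // fps_per_stream


def aipus_needed(num_streams, fps_per_stream=30, headroom_pct=20, fps_per_aipu=3200):
    """Smallest number of AIPUs whose usable throughput covers the load."""
    required = num_streams * fps_per_stream
    per_aipu = usable_fps(fps_per_aipu, headroom_pct)
    return -(-required // per_aipu)  # integer ceiling division


if __name__ == "__main__":
    for name, fps in RESNET50_FPS.items():
        print(name, max_streams(fps))
    print("AIPUs for 200 streams:", aipus_needed(200))
```

Under these assumptions a single AIPU covers about 85 streams, the four-AIPU card about 341, and 200 streams would need 3 AIPUs. Detection or segmentation models are usually heavier than ResNet-50 classification, so treat these numbers as an optimistic ceiling and benchmark your own model before committing to hardware.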

Frequently Asked Questions

Q1: Which AI frameworks does Axelera AI support?

A1: The Voyager SDK natively supports models from frameworks like PyTorch, TensorFlow, and ONNX. You can use Axelera's Model Zoo or bring your own custom-trained models.

Q2: Are these cards certified for industrial use?

A2: Many of Axelera AI's products are designed with industrial certifications (CE, FCC, etc.) in mind. For exact temperature ranges and compliance details, refer to the official product brief.

Q3: What if I need more performance in the future?

A3: You can add more PCIe cards or move to a multi-AIPU version. The underlying software stack remains the same, which keeps your upgrade path straightforward.

Q4: Can I run non-vision workloads (e.g., NLP)?

A4: While the primary focus is computer vision, some NLP or non-vision tasks may work. Check product benchmarks or contact Axelera AI for specialized applications.

Q5: Do I need specialized cooling?

A5: Under typical edge workloads, a standard system fan or passive cooling solution usually suffices. High-load or multi-AIPU use may call for additional airflow.

Resources and Third-Party Support

- Step-by-Step Deployment Guides – official documentation, application notes, and tutorials.

- Voyager SDK on GitHub – reference code, sample pipelines, and community contributions.

- Additional integrations: OpenCL & SYCL via oneAPI Construction Kit, GStreamer pipelines, and Docker images for container-based deployments.

Real-World Use Cases

- Autonomous Robotics: Running multiple cameras for object detection in real time on warehouse floors.

- Smart Retail: Monitoring shelves for out-of-stock products and preventing theft with real-time detection.

- Automated Inspection: Identifying defects on production lines at high speed and resolution.

- Surveillance & Safety: People detection, hazard monitoring, and anomaly detection to maintain safe environments.

Potential Limitations

- Specialization: CNN-based vision applications are the primary target. Extremely large NLP or generative models might require additional optimization work or a different acceleration approach.

- Evolving Software: The Voyager SDK is updated continuously, so plan to track new releases to get the best optimization and feature set.

Conclusion

Axelera AI delivers a range of edge-focused AI accelerators that integrate neatly into standard industrial and enterprise environments. Whether you need a low-profile M.2 module or a multi-AIPU PCIe card for high-volume computer vision, the Metis platform pairs with the Voyager SDK to make deployment straightforward.

If you're looking to reduce latency, optimize power consumption, or simplify your AI rollout, Axelera's solutions balance performance, efficiency, and cost. For more specialized questions or a detailed performance review, consider contacting Axelera AI or exploring the product inquiry page.

Ready to get started?

For personalized guidance, contact us to discuss your requirements, and we will help you find the right edge AI solution.