Supercomputers in the age of AI: Why we need more computing power

Supercomputers have traditionally represented the pinnacle of computing power: machines that fill entire halls and were long reserved for national research centers and highly complex simulations. With the breakthrough of artificial intelligence (AI) and large language models (LLMs), that picture has changed. Today, not only universities but also companies need access to massive computing power.

Training and deploying models with hundreds of billions of parameters poses challenges even for large cloud infrastructures. At the same time, the need to run AI systems on-premises is growing, whether for data protection reasons or to better control costs and latency.

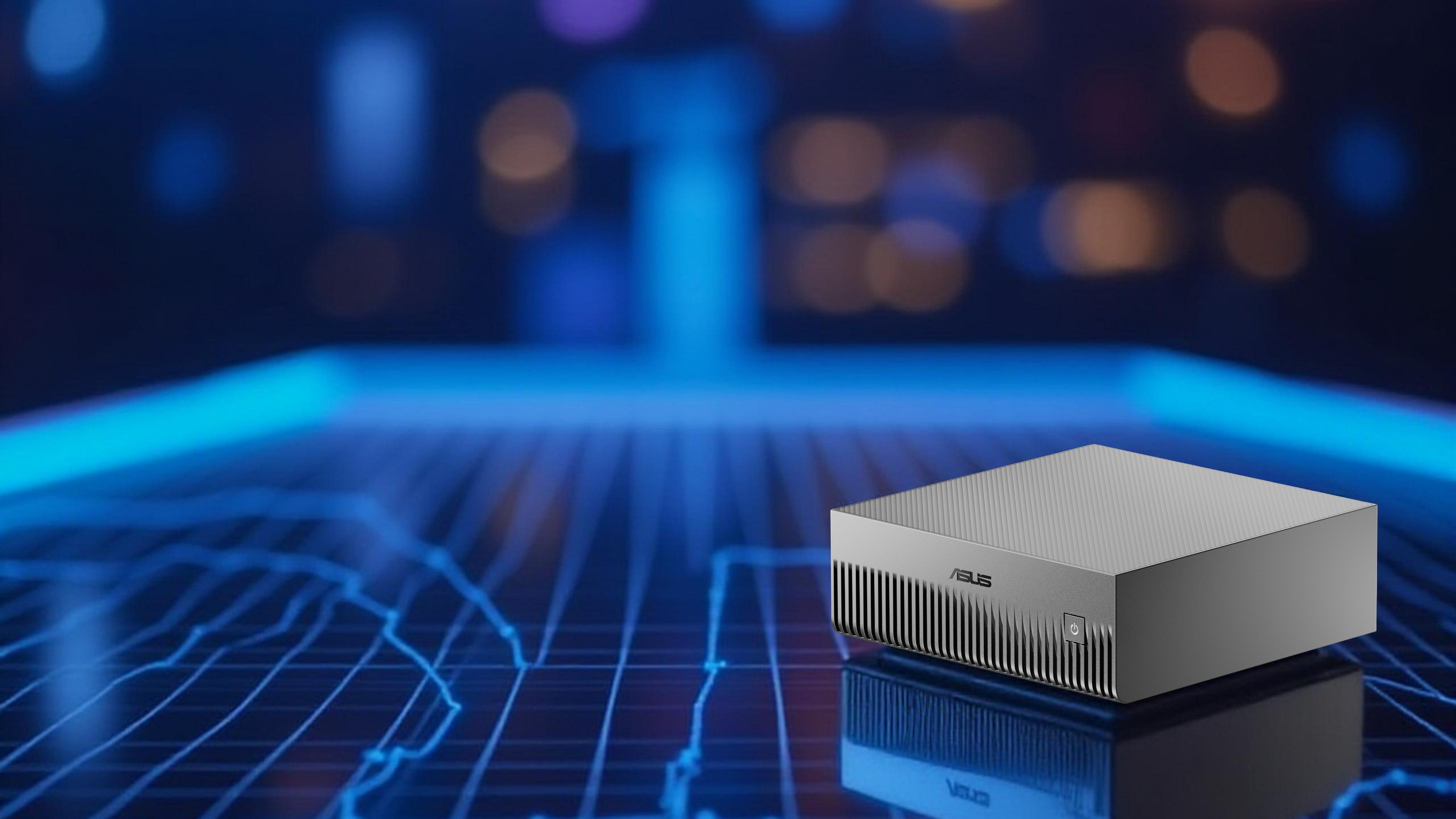

With the ASUS Ascent GX10, which is based on the NVIDIA DGX Spark platform, ASUS is entering a new era of compact supercomputing: a desktop system that brings supercomputer-class AI performance to the desk, specifically for AI development and for training and fine-tuning open LLMs.

Performance, storage & network – the power of the GX10 in detail

At the heart of the ASUS Ascent GX10 is the NVIDIA GB10 Grace Blackwell Superchip, which tightly integrates an Armv9.2-A CPU and a Blackwell GPU and is designed specifically for AI workloads. Because CPU and GPU share one unified memory, data paths are extremely short; combined with high computing performance and energy efficiency, this makes the architecture well suited to deep learning, LLM fine-tuning, and generative AI applications.

The GX10 delivers up to 1 PFLOP of AI computing power (FP4, sparse), making it suitable for training, fine-tuning, and executing large open models such as:

- Meta Llama 3
- Mistral 7B / Mixtral
- Falcon 180B
- OpenHermes / OpenChat

In addition to these established open-source models, OpenAI's open-weight models and community projects can also be run locally. When two GX10 systems are coupled, models with up to 400 billion parameters can be handled.
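As a rough plausibility check for these model sizes: the memory needed just for the model weights is the parameter count times the bytes per parameter. The sketch below assumes 128 GB of memory per GX10 (256 GB for two coupled units) and reserves a hypothetical 20% for the operating system, KV cache, and activations. `weight_memory_gb` and `fits_in_memory` are illustrative helpers, not part of any NVIDIA or ASUS tool, and the parameter counts are nominal values.

```python
# Approximate storage per parameter for common precisions (in bytes).
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_memory(n_params: float, precision: str, memory_gb: float,
                   reserve_fraction: float = 0.2) -> bool:
    """Check whether the weights fit after reserving a share of memory.

    reserve_fraction is an assumption standing in for the OS, KV cache,
    activations, and framework overhead, which vary by workload.
    """
    usable_gb = memory_gb * (1.0 - reserve_fraction)
    return weight_memory_gb(n_params, precision) <= usable_gb


if __name__ == "__main__":
    single, pair = 128, 256  # GB of unified memory: one GX10 vs. two coupled units
    for name, params in [("Mistral 7B", 7e9), ("Falcon 180B", 180e9), ("400B model", 400e9)]:
        for prec in ("fp16", "fp8", "fp4"):
            gb = weight_memory_gb(params, prec)
            print(f"{name:12s} {prec}: {gb:6.1f} GB  "
                  f"one unit: {fits_in_memory(params, prec, single)}  "
                  f"two units: {fits_in_memory(params, prec, pair)}")
```

Under these assumptions, a 400-billion-parameter model fits only across two units and only at FP4 (200 GB of weights), which is consistent with the two-system figure above. Quantizing to FP4 typically costs some accuracy, so the precision choice is part of the trade-off.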

Closed models such as GPT-4 cannot be run locally because their weights are not publicly released. Open models, by contrast, offer full control over data, customization, and fine-tuning.
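To put the 1 PFLOP figure into perspective for fine-tuning, a widely used rule of thumb estimates training compute at roughly 6 × N × D floating-point operations for N parameters and D training tokens (forward plus backward pass). The sketch below divides that by an effective throughput. The 30% utilization default is a hypothetical assumption, and the peak value should be adjusted to the precision actually used: the quoted figure refers to FP4 with sparsity, while training usually runs at higher precision.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate compute for full-parameter training: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


def training_time_hours(n_params: float, n_tokens: float,
                        peak_flops: float = 1e15, utilization: float = 0.3) -> float:
    """Rough wall-clock estimate in hours.

    peak_flops defaults to the quoted 1 PFLOP (FP4, sparse); utilization is an
    assumed fraction of peak, since real workloads rarely reach the peak rate.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    effective_flops = peak_flops * utilization
    return training_flops(n_params, n_tokens) / effective_flops / 3600.0


if __name__ == "__main__":
    # Hypothetical example: full fine-tuning of an 8B model on 100 million tokens.
    print(f"{training_time_hours(8e9, 1e8):.1f} h")
```

Parameter-efficient methods such as LoRA mainly reduce memory requirements; the 6 × N × D estimate is meant for full-parameter training and serves only as an order-of-magnitude guide.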

The GX10 features 128 GB of LPDDR5x unified memory, shared by CPU and GPU, and up to 4 TB of NVMe SSD storage (PCIe Gen5 x4). This allows large data sets to be handled efficiently, even in continuous production operation.
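Unified memory has to hold more than the model weights: during inference, the key-value (KV) cache grows linearly with context length and batch size. The sketch below applies the standard formula (one key tensor and one value tensor per layer). The example configuration of 32 layers, 8 key-value heads, and a head dimension of 128 is assumed from the published Llama 3 8B configuration, and 2 bytes per value assumes an FP16/BF16 cache.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_value: int = 2) -> int:
    """Size of the KV cache in bytes.

    The leading factor 2 accounts for storing both keys and values
    in every layer for every cached token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


if __name__ == "__main__":
    # Assumed Llama-3-8B-style configuration with an 8k-token context, batch size 1.
    size = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, seq_len=8192)
    print(f"{size / 2**30:.2f} GiB")
```

With these assumptions the cache for a single 8k-token request needs exactly 1 GiB; serving 32 such requests in parallel already takes 32 GiB on top of the weights.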

Network connectivity is provided by 10G LAN, Wi-Fi 7, Bluetooth 5, and an NVIDIA ConnectX-7 SmartNIC.

An advanced thermal design ensures stable and quiet operation, even under continuous load.

Measuring just 150 × 150 × 51 mm and weighing 1.48 kg, the GX10 brings real supercomputer power to desktop format.

Technical data

| Component | ASUS Ascent GX10 (NVIDIA DGX Spark) |
|---|---|
| Superchip | NVIDIA GB10 Grace Blackwell |
| CPU | Armv9.2-A (20 cores) |
| GPU | NVIDIA Blackwell (integrated) |
| Memory | 128 GB LPDDR5x unified memory |
| Storage | 1 TB / 2 TB / 4 TB NVMe SSD (PCIe Gen5 x4) |
| Network | 10G LAN, Wi-Fi 7, Bluetooth 5, ConnectX-7 |
| Cooling | Liquid cooling (optimized for continuous operation) |
| Dimensions | 150 × 150 × 51 mm |
| Weight | 1.48 kg |
| Operating system | NVIDIA DGX Base OS (Ubuntu Linux) |
| I/O ports (rear) | 3× USB-C (20 Gbps), 1× USB-C PD (180 W), 1× HDMI 2.1, 1× 10G LAN, 1× ConnectX-7 |

The full specifications are listed in the official ASUS Ascent GX10 datasheet (PDF).

With these specifications, the ASUS Ascent GX10 clearly positions itself between traditional desktop systems and large computing clusters like the NVIDIA DGX series. To put its performance into perspective, it's worth comparing it with the DGX H100 and typical cloud instances:

| Criterion | ASUS Ascent GX10 (NVIDIA DGX Spark) | NVIDIA DGX H100 | Cloud (AWS/Azure/Google) |
|---|---|---|---|
| Peak AI computing power | Up to 1 PFLOP (FP4, sparse) | Up to 32 PFLOPS (FP8, sparse; 8× H100) | Variable (depending on instance) |
| Maximum model size | Up to ~400B parameters (2 coupled systems) | Several hundred billion parameters (640 GB HBM3 GPU memory) | Any, depending on budget |
| Energy efficiency | Very high (Grace Blackwell) | High (Hopper) | Medium to high |
| Data sovereignty | Completely on-premises | Completely on-premises | Data stored with an external provider |
| Investment costs | High (one-time) | Very high (one-time) | None (pay-as-you-go) |
| Running costs | Low (power and cooling) | Significant (up to ~10 kW system power, plus cooling) | High (ongoing usage fees) |
| Scalability | Very good: 2 systems can be coupled | Very high: multi-node clusters (e.g., DGX SuperPOD) | Very high: elastic cloud scaling |
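The trade-off between a one-time purchase and pay-as-you-go cloud pricing can be framed as a simple break-even calculation: buying pays off once the hourly savings over the cloud add up to the purchase price. The article quotes no prices, so every number in the sketch is a hypothetical placeholder, and the model deliberately ignores depreciation, staff time, financing, and idle hours.

```python
import math


def break_even_hours(purchase_cost: float, local_cost_per_hour: float,
                     cloud_cost_per_hour: float) -> float:
    """Hours of use after which buying becomes cheaper than renting.

    local_cost_per_hour covers running costs such as electricity;
    returns math.inf if the cloud is not more expensive per hour.
    """
    hourly_saving = cloud_cost_per_hour - local_cost_per_hour
    if hourly_saving <= 0:
        return math.inf
    return purchase_cost / hourly_saving


if __name__ == "__main__":
    # Hypothetical placeholder figures, not real prices.
    hours = break_even_hours(purchase_cost=4000.0, local_cost_per_hour=0.5,
                             cloud_cost_per_hour=2.5)
    print(f"Break-even after {hours:.0f} hours (~{hours / 8:.0f} eight-hour workdays)")
```

The higher and steadier the utilization, the sooner an on-premises system reaches break-even; for occasional, bursty workloads the cloud column of the table tends to win.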

Compact, robust and efficient: design of the GX10

The ASUS Ascent GX10 is not a classic rack server, but a compact desktop supercomputer that can be easily integrated into existing work environments thanks to its form factor of only 150 × 150 × 51 mm – without the need for a data center environment.

Despite its small size, the GX10 is designed for continuous operation under full load: A powerful liquid cooling system ensures that the system remains stable, quiet, and energy-efficient even under intensive AI workloads.

A key feature is scalability. Two GX10 systems can be coupled via ConnectX-7 to train larger models or handle complex inference tasks. ASUS thus offers a modular solution that keeps pace with growing demands, a decisive advantage for research institutions or companies with a long-term AI strategy.
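The article does not say how work is divided between two coupled units. One common approach is pipeline parallelism, where each unit holds a contiguous block of layers. The hypothetical helper below picks the split point that minimizes the larger of the two stage loads, assuming the per-layer memory (or compute) cost is known in advance; real frameworks also weigh communication and activation memory.

```python
import math
from typing import List, Tuple


def best_two_way_split(layer_costs: List[float]) -> Tuple[int, float]:
    """Split layers into two contiguous stages with the most even load.

    Returns (k, max_stage_cost): layers [0, k) go to the first unit,
    layers [k, n) to the second.
    """
    if len(layer_costs) < 2:
        raise ValueError("need at least two layers to split across two units")
    total = sum(layer_costs)
    best_k, best_max = 0, math.inf
    prefix = 0.0
    for k in range(1, len(layer_costs)):
        prefix += layer_costs[k - 1]  # cost of layers [0, k)
        stage_max = max(prefix, total - prefix)
        if stage_max < best_max:
            best_k, best_max = k, stage_max
    return best_k, best_max


if __name__ == "__main__":
    # Hypothetical per-layer memory in GB, with larger first and last layers.
    costs = [8.0] + [3.0] * 40 + [8.0]
    k, worst = best_two_way_split(costs)
    print(f"Unit 1: layers 0-{k - 1}, unit 2: layers {k}-{len(costs) - 1}, max load {worst} GB")
```

Because only one split point has to be chosen, trying every position is cheap; with more than two stages, the same idea generalizes to the classic linear-partition problem.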

The GX10 is also future-proof when it comes to connectivity: PCIe Gen5, 10G LAN, Wi-Fi 7, Bluetooth 5, and the ConnectX-7 SmartNIC ensure fast data connections and seamless integration into existing networks.

The system ships with NVIDIA DGX Base OS, pre-configured AI frameworks and libraries (e.g., PyTorch, TensorFlow, Hugging Face), and remote management tools, so it is ready to use right out of the box.
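Since PyTorch is among the pre-installed frameworks, a quick sanity check after first boot is to confirm that it can see the integrated Blackwell GPU. The helper below uses only standard PyTorch calls (`torch.cuda.is_available`, `torch.cuda.get_device_name`); the function name `describe_accelerator` is hypothetical, and the check degrades gracefully on machines where PyTorch is not installed.

```python
from typing import Optional, TypedDict


class AcceleratorInfo(TypedDict):
    torch_installed: bool
    cuda_available: bool
    device_name: Optional[str]


def describe_accelerator() -> AcceleratorInfo:
    """Report whether PyTorch is installed and can use a CUDA GPU."""
    try:
        import torch  # imported lazily so the check also runs without PyTorch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False, "device_name": None}
    available = torch.cuda.is_available()
    name = torch.cuda.get_device_name(0) if available else None
    return {"torch_installed": True, "cuda_available": available, "device_name": name}


if __name__ == "__main__":
    print(describe_accelerator())
```

If `cuda_available` comes back `False` on the GX10 itself, the PyTorch build or driver setup is the first thing to check.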

From research to business: Where the GX10 shows its strengths

The ASUS Ascent GX10 is designed for a wide range of use cases, from scientific research to mission-critical AI workloads.

In research, the GX10 enables the training and fine-tuning of complex models – for example, for natural language processing, genomics, or simulations in physics. Universities and institutes particularly benefit from being able to process data locally without relying on cloud services.

Companies can also implement AI projects in-house with the GX10, whether building agentic AI systems, automating business processes, or developing customized LLMs. The system's combination of computing power, energy efficiency, and data protection makes it ideal for environments where sensitive information cannot leave the premises, such as healthcare, financial services, or public administration.

Last but not least, the GX10 offers modular scalability: those who start with a single unit can later couple a second GX10 and thus build a small on-premises AI computing setup, with full data sovereignty and predictable costs.

Who benefits from the GX10 – and what are its limitations?

With the ASUS Ascent GX10, ASUS has set a new standard in compact AI supercomputing. The NVIDIA GB10 Grace Blackwell superchip combines extreme computing power with high energy efficiency, offering a viable alternative to cloud or large-scale systems.

The GX10 is particularly suitable for research institutions, startups, and companies that want to train or operate their own AI models without outsourcing data. It's also ideal for development teams to locally develop and test prototypes, LLMs, or agentic AI applications.

The system reaches its limits where massive cluster workloads or distributed training across dozens of GPUs are required: two GX10 units can still be coupled, but they do not reach the level of a complete DGX H100 cluster.

ASUS Ascent GX10 Supercomputer – Prices, Availability & Advice

Want to find out if the ASUS Ascent GX10 is the right choice for your business or research?

Visit our product page to request details.

Alternatively, we also offer individual consulting and support in setting up customized AI infrastructures.